Publications and Projects

Dreamcrafter: Immersive Editing of 3D Radiance Fields Through Flexible, Generative Inputs and Outputs

Authors: Cyrus Vachha, Yixiao Kang, Zach Dive, Ashwat Chidambaram, Anik Gupta, Eunice Jun, Björn Hartmann (2024)

We present Dreamcrafter, a VR-based world-building tool for editing photorealistic 3D Radiance Field scenes using generative AI in an immersive, real-time interface. It combines direct manipulation with interfaces to generative image and 3D models at different levels of abstraction and controllability, offering workflows for creating and modifying 3D scenes and for staging scenes for generative video models. The system introduces a modular architecture, multi-level control options, and proxy representations that support interaction during high-latency operations, enhancing interaction in 3D content creation.

- Presented at the UIST 2024 Poster Session

- Conditionally Accepted to CHI 2025

- Work primarily done during my fifth-year EECS Master's at UC Berkeley (2023-2024), with initial planning in 2022

Instruct-GS2GS

Authors: Cyrus Vachha and Ayaan Haque (2023)

We propose a method for editing 3D Gaussian Splatting (3DGS) scenes with text instructions, using an approach similar to Instruct-NeRF2NeRF. Given a 3DGS reconstruction of a scene and the collection of images used to reconstruct it, our method uses an image-conditioned diffusion model (InstructPix2Pix) to iteratively edit the input images while optimizing the underlying scene, resulting in an optimized 3D scene that respects the edit instruction. We demonstrate that our method can edit large-scale, real-world scenes and can accomplish more realistic, targeted edits than prior work.

- Paper coming soon

- Nerfstudio integration supported

Nerfstudio Blender VFX Add-on

Author: Cyrus Vachha (2023)

We present a pipeline for integrating NeRFs into traditional VFX compositing pipelines using Nerfstudio, an open-source framework for training and rendering NeRFs. Our approach uses Blender, a widely used open-source 3D creation suite, to align camera paths and composite NeRF renders with meshes and other NeRFs, allowing NeRFs to be integrated seamlessly into traditional VFX pipelines. Our Blender add-on enables more controlled camera trajectories through photorealistic scenes, compositing of meshes and other environmental effects with NeRFs, and compositing of multiple NeRFs in a single scene. This approach of generating NeRF-aligned camera paths can be adapted to other 3D toolsets and workflows, enabling smoother integration of NeRFs into visual effects and film production.

- Shown at the CVPR 2023 Art Gallery

- Abstract on arXiv here

- View more renders here

StreamFunnel

Authors: Haohua Lyu, Cyrus Vachha, Qianyi Chen, Balasaravanan Thoravi Kumaravel, Björn Hartmann (2023)

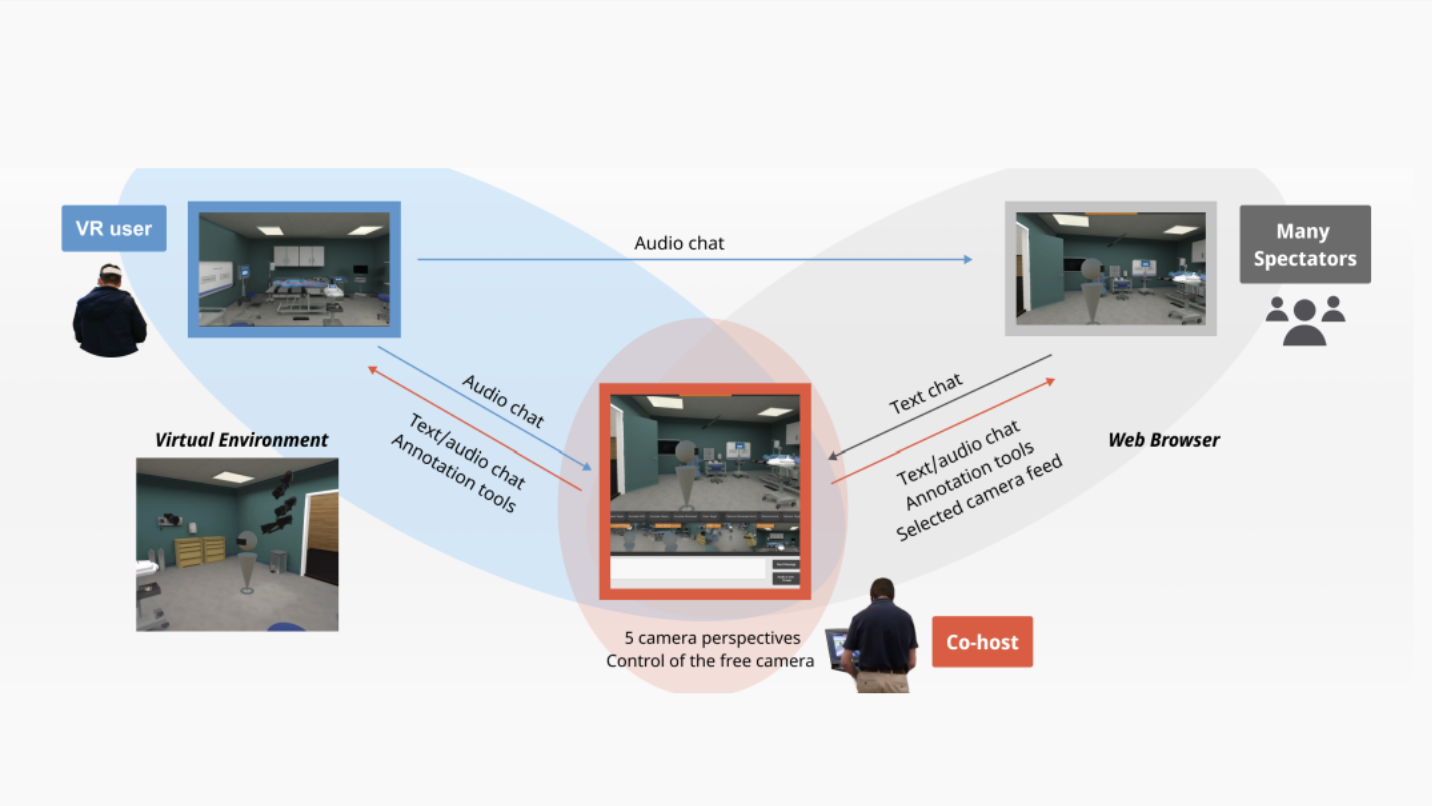

The increasing adoption of Virtual Reality (VR) systems in different domains has led to a need to support interaction between many spectators and a VR user. This is common in game streaming, live performances, and webinars. Prior CSCW systems for VR environments are limited to small groups of users. In this work, we identify problems associated with interaction involving large groups of users. To address them, we introduce an additional user role: the co-host, who mediates communication between the VR user and many spectators. To facilitate this mediation, we present StreamFunnel, which allows the co-host to be part of the VR application's space and interact with it. The design of StreamFunnel was informed by formative interviews with six experts. StreamFunnel uses a cloud-based streaming solution that enables a remote co-host and many spectators to view and interact through standard web browsers, without requiring any custom software. We present results of informal user testing, which provide insights into StreamFunnel's ability to facilitate these scalable interactions. Our participants, who took the role of co-host, found that StreamFunnel enabled them to add value by presenting the VR experience to the spectators and relaying useful information from the live chat to the VR user.

WebTransceiVR (CHI 22)

Authors: Haohua Lyu, Cyrus Vachha, Qianyi Chen, Odysseus Pyrinis, Avery Liou, Balasaravanan Thoravi Kumaravel, Björn Hartmann (2022)

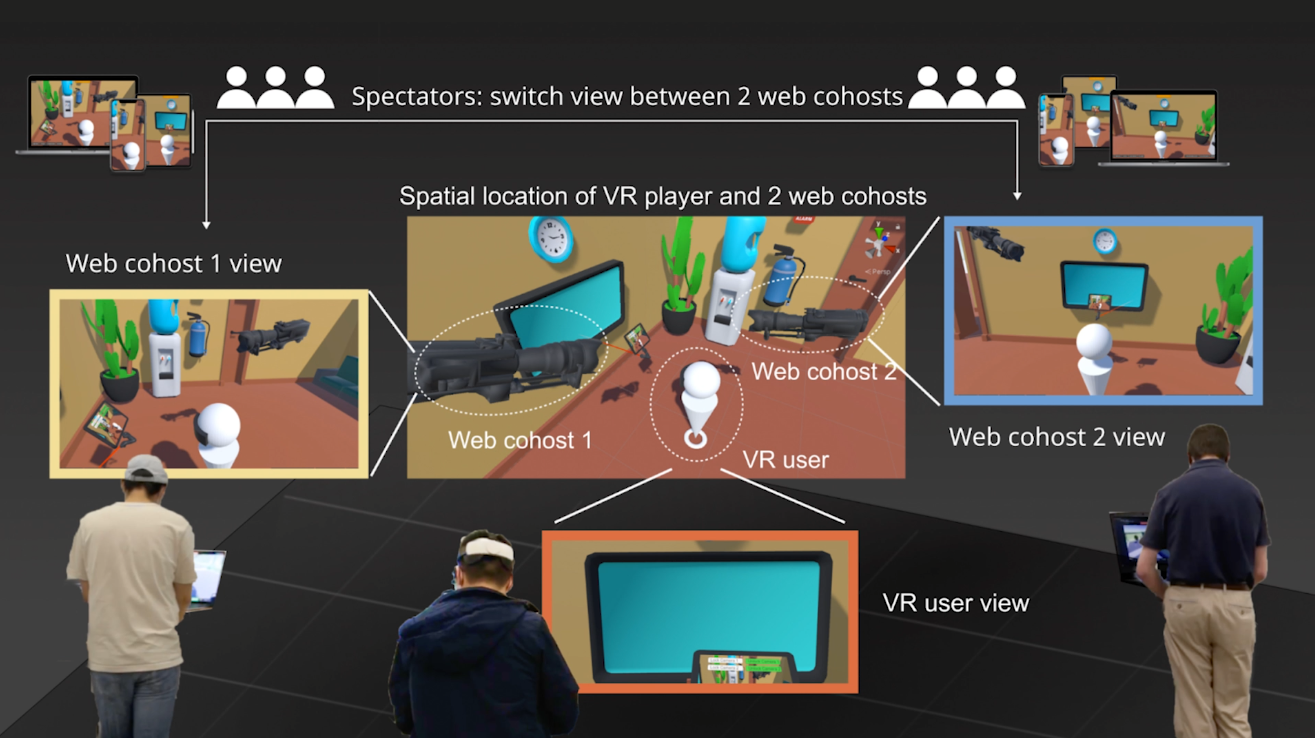

We propose WebTransceiVR, an asymmetric collaboration toolkit that, when integrated into a VR application, allows multiple non-VR users to share the virtual space of the VR user. It allows external users to enter and be part of the VR application's space through standard web browsers on mobile devices and computers. WebTransceiVR also includes a cloud-based streaming solution that enables many passive spectators to view the scene through any of the active cameras. We conduct informal user testing to gain additional insights for future work.

Future/Current Research Projects

I am wrapping up a project (Dreamcrafter) on editing synthetic Radiance Field environments in VR for my master's thesis. A progress update from 2023 can be viewed here.

I'm currently working on a 3D scene understanding system in XR, built from egocentric captures of a workspace and of user interactions. The system leverages vision and language models through multimodal input and output so that users can visualize and learn about the environment, viewing query responses in 3D, audio, or other mediums. In essence, it captures and interfaces with a physically grounded world model.

Nerfstudio Contributions

Since January 2023, I have been contributing features to Nerfstudio, including the Blender VFX add-on and VR180 and omnidirectional (VR 360) video and image render outputs.